Now that we have control over the root filesystem, let's start looking into networking. By the end of this article, we want to be able to access the internet from the uVM & be able to access components within the uVM from the host.

The basics

If you look at the getting started pages for Firecracker, they demonstrate how to set up basic networking for a single microVM instance:

- Enabling IPv4 forwarding on your host

- Creating a TAP device to capture all the packets that should be forwarded into the uVM

- Creating an iptables rule to accept all packets forwarded from the tap device to the host interface

- Creating an iptables rule to accept all packets based on their connection state (via conntrack)

- Creating an iptables NAT rule to “masquerade” traffic leaving the host interface

- Bringing up networking within the guest

Enable IPv4 forwarding on the host

This is the simplest step: we need to enable IPv4 forwarding on the host so that it will allow us to forward packets between network segments. Essentially, this lets the Linux kernel act as a router, forwarding packets to and from our uVM’s tap device.
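Before changing the setting, it can be useful to see whether forwarding is already on. The sketch below is only an illustration: the function name ipv4_forwarding_enabled is made up here, and its optional path argument exists purely so the check can be pointed at a different file.

```shell
# Return success if IPv4 forwarding is enabled.
# The optional argument overrides the file to read (hypothetical convenience,
# handy for trying the function out); it defaults to the real procfs entry.
ipv4_forwarding_enabled() {
    path=${1:-/proc/sys/net/ipv4/ip_forward}
    [ "$(cat "$path" 2>/dev/null)" = "1" ]
}

if ipv4_forwarding_enabled; then
    echo "IPv4 forwarding is already enabled"
else
    echo "IPv4 forwarding is disabled"
fi
```

Keep in mind that writing 1 to this file, as the next command does, only lasts until the host reboots.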

sudo sh -c "echo 1 > /proc/sys/net/ipv4/ip_forward"

Creating a TAP device

Firecracker exposes a virtio network device to the guest that’s backed by a TAP device on the host. The emulated device exchanges L2 (Ethernet) frames between the guest and the host-side tap device, which allows the microVM to communicate with the host.

sudo ip tuntap add dev tap0 mode tap
sudo ip addr add 10.200.0.1/24 dev tap0
sudo ip link set dev tap0 up

Accept all packets coming from the tap device

On your host, there’s likely a single interface that’s responsible for connecting to the internet, called something like eth0 or ens4. We need to accept all packets that are being sent from our tap device to our host interface. We can do this via an iptables rule:

sudo iptables \
  -t filter \
  -A FORWARD \
  -i tap0 \
  -o ens4 \
  -j ACCEPT

In plain English: on the filter table (-t filter), append a rule to the forwarding chain (-A FORWARD) so that all packets coming from tap0 (-i tap0) and going to ens4 (-o ens4) are accepted (-j ACCEPT).
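The rule above hardcodes ens4, but the interface name differs between machines. Here is a minimal sketch for looking it up, assuming the interface you want is the one that carries the default IPv4 route. The names default_iface and HOST_IFACE are hypothetical, not part of any tool.

```shell
# Print the interface used by the default route.
# Expects `ip route show default` style output on stdin, for example:
#   default via 10.128.0.1 dev ens4 proto dhcp src 10.128.0.2 metric 100
# Only the first default route is considered.
default_iface() {
    awk '$1 == "default" {
        for (i = 2; i < NF; i++) {
            if ($i == "dev") { print $(i + 1); exit }
        }
    }'
}

# Ask the kernel for the default route and keep the interface name.
HOST_IFACE=$(ip -4 route show default 2>/dev/null | default_iface)
echo "host interface: ${HOST_IFACE:-<not found>}"
```

With that in place, `-o "$HOST_IFACE"` could stand in for `-o ens4` in the iptables rules, provided the lookup actually found an interface.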

Accept packets on connection state

The above rule works for packets leaving the guest and heading towards the internet, but what about the reverse? We don’t want to allow all packets to enter the uVM; we really only want to allow connections that the guest started. We can do this via conntrack, the kernel’s connection tracking, which iptables exposes as a match module. It records connection state and lets us make filtering decisions based on that state.

In our case, we want to blanket allow any packet coming from anywhere and going to anywhere if it’s part of an already open connection or related to one. This gives us the following rule:

sudo iptables \
  -t filter \
  -A FORWARD \
  -m conntrack \
  --ctstate RELATED,ESTABLISHED \
  -j ACCEPT

In plain English: on the filter table (-t filter), append a rule to the forwarding chain (-A FORWARD) so that all packets for a connection with conntrack state RELATED or ESTABLISHED (-m conntrack --ctstate RELATED,ESTABLISHED) are accepted (-j ACCEPT).

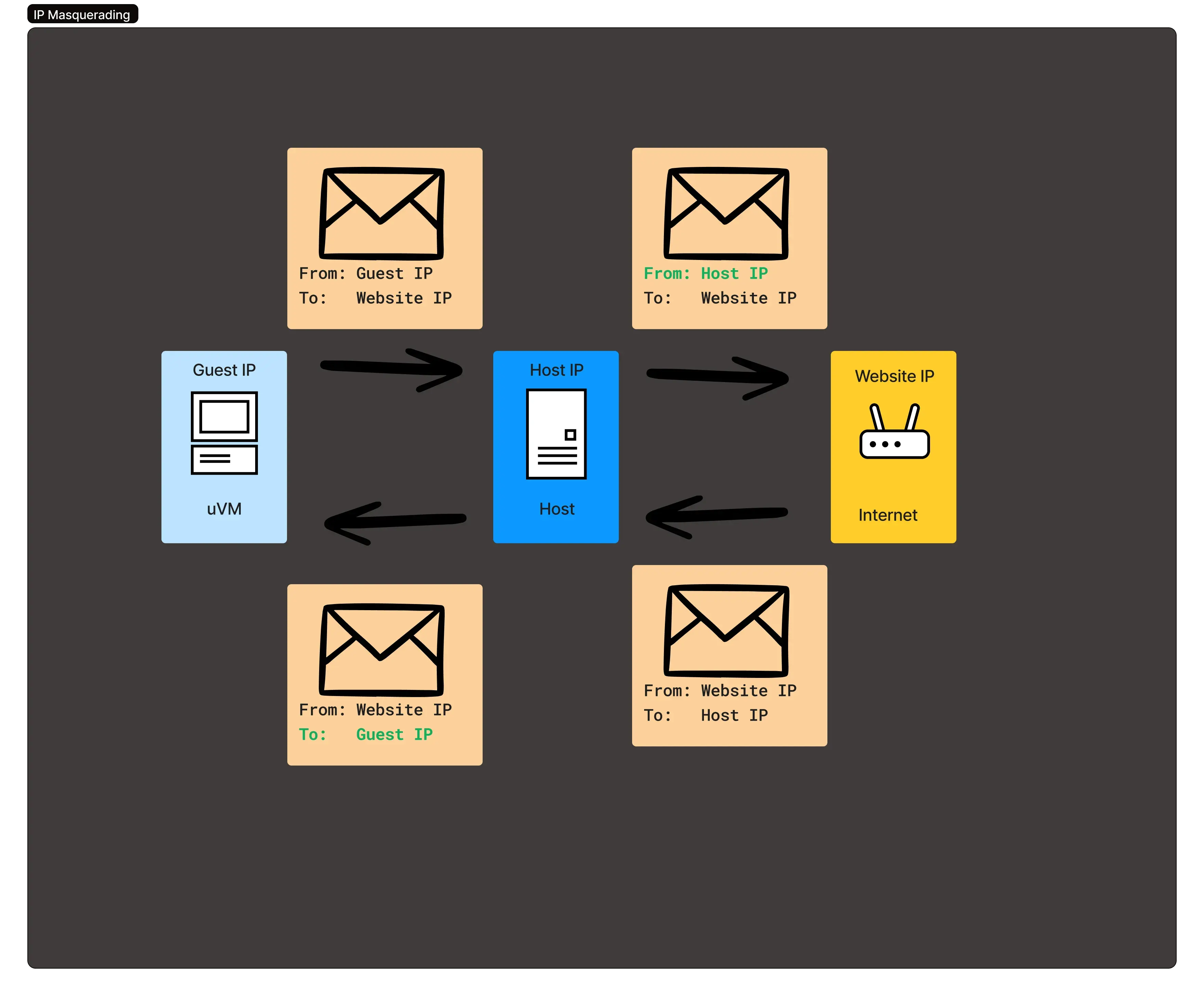

Masquerade traffic leaving the host

When packets leave the guest, they’ll have the guest IP address as their source. That doesn’t make sense once a packet leaves the host, because the guest IP address isn’t reachable from outside: when a server on the internet tries to send a reply to the guest, it won’t know how to route it. To get around this, we can ask iptables to MASQUERADE packets leaving the host for the internet, which essentially means replacing the IP address of the guest with the IP address of the host in every packet. This allows routing to flow, with iptables swapping out the guest & host IPs as necessary to route traffic to the correct destination. This is a little easier to see in a diagram.

sudo iptables \
  -t nat \
  -A POSTROUTING \
  -o ens4 \
  -j MASQUERADE

In plain English: on the nat (Network Address Translation) table (-t nat), append a rule to the postrouting chain (-A POSTROUTING) so that all packets leaving via ens4 (-o ens4) are masqueraded (-j MASQUERADE).
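The host-side steps so far can be collected into one script. What follows is a sketch under a few assumptions rather than anything taken from the Firecracker docs: the helper names (run, ensure_rule, setup_host_network) and the DRY_RUN switch are made up for illustration. It uses `sysctl -w net.ipv4.ip_forward=1`, which has the same effect as the echo into /proc, and `iptables -C` to check for an existing rule before appending, since running `-A` twice would otherwise leave duplicate rules behind.

```shell
#!/bin/sh
# Sketch only: helper names and the DRY_RUN switch are hypothetical.

# Run a command as root, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        sudo "$@"
    fi
}

# Append an iptables rule unless an identical rule already exists.
# Usage: ensure_rule <table> <chain> <rule spec...>
ensure_rule() {
    rule_table=$1
    rule_chain=$2
    shift 2
    if [ "${DRY_RUN:-0}" != "1" ] &&
        sudo iptables -t "$rule_table" -C "$rule_chain" "$@" 2>/dev/null; then
        return 0
    fi
    run iptables -t "$rule_table" -A "$rule_chain" "$@"
}

# Usage: setup_host_network <tap device> <host interface> <tap address/prefix>
setup_host_network() {
    tap=$1
    host_iface=$2
    tap_cidr=$3

    # Same effect as: echo 1 > /proc/sys/net/ipv4/ip_forward
    run sysctl -w net.ipv4.ip_forward=1

    # Create the tap device only if it does not exist yet.
    if [ "${DRY_RUN:-0}" = "1" ] || ! ip link show "$tap" >/dev/null 2>&1; then
        run ip tuntap add dev "$tap" mode tap
    fi
    # "replace" adds the address, or leaves it in place if already assigned.
    run ip addr replace "$tap_cidr" dev "$tap"
    run ip link set dev "$tap" up

    # The three iptables rules from this article.
    ensure_rule filter FORWARD -i "$tap" -o "$host_iface" -j ACCEPT
    ensure_rule filter FORWARD -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT
    ensure_rule nat POSTROUTING -o "$host_iface" -j MASQUERADE
}
```

Calling `DRY_RUN=1 setup_host_network tap0 ens4 10.200.0.1/24` only prints the commands, which is a cheap way to review them before running the same call without DRY_RUN.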

Bringing up networking within the guest

After the tap device is created & all the iptables rules are set, you also need to register the tap device with Firecracker before you start the microVM. Let’s update our vmconfig file so that it includes a network-interfaces section.

// /tmp/vmconfig.json
{
  "boot-source": {
    "kernel_image_path": "/tmp/kernel.bin",
    "boot_args": "console=ttyS0 reboot=k panic=1 pci=off"
  },
  "drives": [
    {
      "drive_id": "rootfs",
      "is_root_device": true,
      "is_read_only": false,
      "path_on_host": "/tmp/rootfs.ext4"
    }
  ],
  "network-interfaces": [
    {
      "iface_id": "net1",
      "guest_mac": "06:00:AC:10:00:02",
      "host_dev_name": "tap0"
    }
  ]
}

After you start the microVM, you need to bring up networking within the guest. That’ll look something like this:

ip addr add 10.200.0.2/24 dev eth0
ip link set eth0 up
# Remember, we gave our tap device the 10.200.0.1 IP address
ip route add default via 10.200.0.1 dev eth0

# Sanity check, ping Google's DNS server
ping 8.8.8.8
# PING 8.8.8.8 (8.8.8.8): 56 data bytes
# 64 bytes from 8.8.8.8: seq=0 ttl=114 time=1.496 ms

# And on our host we can ping the uVM
ping 10.200.0.2
# PING 10.200.0.2 (10.200.0.2) 56(84) bytes of data.
# 64 bytes from 10.200.0.2: icmp_seq=1 ttl=64 time=0.960 ms

Success!

Automatically bringing the guest networking up

It’s not great that we need access to the guest via the serial terminal just to start networking. Let’s configure a new OpenRC init service within our rootfs that will automatically bring the network up!

First off, there’s the service definition:

# ./etc/init.d/guest-networking
#!/sbin/openrc-run

start() {
    einfo "Setting up guest internet"
    eindent

    local iface=eth0
    local ip=10.200.0.2
    local gateway=10.200.0.1

    einfo "Setting up ip routing for $iface with ip $ip and gateway $gateway"
    ip addr add "$ip/24" dev "$iface"
    ip link set "$iface" up
    ip route add default via "$gateway" dev "$iface"

    eoutdent
    return 0
}

stop() {
    return 0
}

Then update the Dockerfile so that OpenRC will automatically start our guest-networking service on boot:

- RUN rc-update add boot-arg-logger
+ RUN rc-update add boot-arg-logger \
+     && rc-update add guest-networking

There’s a problem with this hard-coded approach. What if we want more than one uVM? They’d all try to assign themselves the same IP address & that won’t work! There are two ways to mitigate this:

- Spawn each Firecracker instance in a network namespace

- Find a way to plumb data into the networking setup

While we’re going to explore network namespaces soon™, let’s try plumbing the data in for now. We saw in the last article that we can access the kernel boot arguments from our init service, so why don’t we pass in parameters there?

// ./vmconfig.json
  "boot-source": {
    "kernel_image_path": "/tmp/kernel.bin",
-   "boot_args": "console=ttyS0 reboot=k panic=1 pci=off"
+   "boot_args": "console=ttyS0 reboot=k panic=1 pci=off IP_ADDRESS::10.200.0.2 IFACE::eth0 GATEWAY::10.200.0.1"
  },
}

# ./etc/init.d/guest-networking

-    local iface=eth0
-    local ip=10.200.0.2
-    local gateway=10.200.0.1
+    einfo "Parsing cmdline $(cat /proc/cmdline)"
+    local ip=$(cat /proc/cmdline | sed -n 's/.*IP_ADDRESS::\([^ ]\+\).*/\1/p')
+    local iface=$(cat /proc/cmdline | sed -n 's/.*IFACE::\([^ ]\+\).*/\1/p')
+    local gateway=$(cat /proc/cmdline | sed -n 's/.*GATEWAY::\([^ ]\+\).*/\1/p')
+    if [ -z "$ip" ]; then
+        eerror "No IP address found in kernel command line"
+        eoutdent
+        return 1
+    fi
+    if [ -z "$iface" ]; then
+        eerror "No interface found in kernel command line"
+        eoutdent
+        return 1
+    fi
+    if [ -z "$gateway" ]; then
+        eerror "No gateway found in kernel command line"
+        eoutdent
+        return 1
+    fi

After starting Firecracker again, we see that our guest network is up & running again, except now it’s using the parameters we passed in via the kernel boot args. We can now build on that to spawn more uVMs in parallel. The simplest case would be to dedicate a /24 CIDR block [i.e. 10.200.0.X, 10.200.1.X, 10.200.2.X] to each VM, although we’d be wasting 252 IPs per CIDR block since we only need the tap device & the guest IP.
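To make the one-/24-per-VM idea concrete, here is a rough sketch that derives each VM’s settings from a numeric index and builds the matching boot args. The naming scheme (tapN on the host, 10.200.N.1 for the tap device, 10.200.N.2 for the guest) and all function names are assumptions for illustration. cmdline_param mirrors the sed expression from the service, written with `[^ ][^ ]*` instead of `\+` so that it also works with sed implementations lacking that extension.

```shell
# Per-VM settings derived from an index N (0 to 255). Hypothetical scheme:
# tap device tapN, host side 10.200.N.1, guest side 10.200.N.2.
vm_tap()      { echo "tap$1"; }
vm_host_ip()  { echo "10.200.$1.1"; }
vm_guest_ip() { echo "10.200.$1.2"; }

# Build the extra boot args that the guest-networking service parses.
vm_boot_args() {
    echo "IP_ADDRESS::$(vm_guest_ip "$1") IFACE::eth0 GATEWAY::$(vm_host_ip "$1")"
}

# Extract the value of KEY::value from a kernel command line string.
# Usage: cmdline_param <KEY> <cmdline>
cmdline_param() {
    echo "$2" | sed -n "s/.*$1::\([^ ][^ ]*\).*/\1/p"
}

# Show what three VMs would get.
for n in 0 1 2; do
    echo "vm$n: tap=$(vm_tap "$n") host=$(vm_host_ip "$n") boot_args='$(vm_boot_args "$n")'"
done
```

Each VM would still need its own tap device created on the host and its own vmconfig with the matching host_dev_name and boot_args.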

You can view code samples from this article on my GitHub.